The idea for this article came from a presentation I attended at NgPoland 2023: Matt Lewis, "Making Development Times Fast With Esbuild". If you don't have access to the talk, I recommend checking out https://github.com/clickup/ngx-esbuild

Starting a project from scratch always gives you a sense of freedom and the urge to get it right the first time. Only clean code, only the latest and greatest tools, clean domain separation because everything is so clear, right?

Managing your code base is like tending a garden. At first you're excited and watch it grow more and more. You invest time and feelings into it.

But life happens. Reality happens. You have other priorities, the garden may not be that important anymore, or you've handed some of the responsibilities to someone who probably doesn't care as much as you do.

The garden gets bigger and bigger, and at some point you notice your plants growing very slowly or, worse, withering.

The same happens with the code base. The business grows, the developer teams grow, and you end up wearing other hats in the project.

If something goes wrong, it's hard to pinpoint when and what exactly went wrong if you aren't keeping track of changes.

Monitoring the Angular local build times

One of the problems I've encountered on big projects is the local build time. Sooner or later you'll hear developers complaining about it, but nobody knows exactly why or when it started.

That's why I believe monitoring can help you a lot in these scenarios, and even more if you use it to avoid getting into them in the first place.

The approach I took in this article is fairly bare-bones. I wanted to be able to reuse parts of the logic, which is why I went down the shell path. Or maybe I just needed a challenge, since shell is probably not one of my strongest points.

What do we want to monitor?

In our case, we have two metrics:

- INITIAL_LOAD: the initial build time, when you run ng serve
- RELOAD: the build time when you change something and save

Understanding the logs and how we get the metrics

Fortunately, Angular's logs are easy to read. The output of ng serve looks like this, first for the initial build and then for a rebuild after saving a file:

```text
main.js | main | 12.52 kB |
...
| Initial Total | 16.67 kB

Lazy Chunk Files | Names | Raw Size
chunk-IUFG73SY.js | - | 55.65 kB |
...

Application bundle generation complete. [2.302 seconds]

➜ Local: http://localhost:4200/

Watch mode enabled. Watching for file changes...

Initial Chunk Files | Names | Raw Size
main.js | main | 12.52 kB |
...
| Initial Total | 16.67 kB

Lazy Chunk Files | Names | Raw Size
chunk-IUFG73SY.js | - | 55.65 kB |
...

Application bundle generation complete. [0.299 seconds]

Reloading client(s)...
```

All we have to do is find every line that contains "Application bundle generation complete. [2.302 seconds]" and extract the number of seconds (2.302) from it.

How do we tell INITIAL_LOAD and RELOAD apart? INITIAL_LOAD is always the first one logged; whatever comes after it is a RELOAD.

The log file is overwritten on every run, so it only ever contains the current session.
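The labeling rule on its own (first value is INITIAL_LOAD, everything after it is RELOAD) fits in a few lines of awk. This is a minimal sketch rather than part of the original setup; it assumes the times have already been extracted one per line, and the function name classify_times is hypothetical:

```shell
# Hypothetical helper: reads build times (one number per line) from stdin,
# skips blank lines, labels the first time INITIAL_LOAD and every later one RELOAD.
classify_times() {
  awk 'NF == 0 { next }
       ++n == 1 { print "INITIAL_LOAD", $1; next }
       { print "RELOAD", $1 }'
}

# Usage: one initial build followed by one rebuild after a save
printf '2.302\n0.299\n' | classify_times
# INITIAL_LOAD 2.302
# RELOAD 0.299
```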

Setup

- Change the script that npm start will execute

In package.json, replace the start script (the command npm start runs, ng serve by default) with the shell script to execute:

```json
"scripts": {
  ...
  "start": "sh serve.sh",
  ...
},
```

- Create serve.sh

The script creates the log file if it doesn't exist and runs sh match-script.sh when ng serve is terminated. There are many ways to do this; for this example we'll go with trap.

```shell
# Make match-script.sh executable (optional, since we call it through sh)
chmod +x match-script.sh

# Define the path to the log file
LOG_FILE="ng_serve.log"

# Define the script you want to run
MATCH_SCRIPT="sh match-script.sh"

# Check if the log file exists
if [ ! -f "$LOG_FILE" ]; then
  # If it doesn't, create it (touch creates it readable and writable)
  touch "$LOG_FILE"
fi

echo "START MONITORING AND BUILD ANGULAR DEV"

# When ng serve is terminated, run $MATCH_SCRIPT
trap "$MATCH_SCRIPT" 1 2 3 6

# Run the Angular CLI `ng serve` command and copy its output to the log file
ng serve | tee "$LOG_FILE"
```

trap runs the given command when the shell receives any of the listed signals.

1, 2, 3 and 6 are the signals SIGHUP, SIGINT, SIGQUIT and SIGABRT. They cover most of the ways ng serve gets terminated; pressing Ctrl+C, for example, sends SIGINT.

tee prints the output of ng serve to the terminal and also writes it to the log file, overwriting its previous contents.
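To see trap on its own, here is a small demo that is not part of the original setup: a child shell registers the same four signals and then sends itself SIGINT, as pressing Ctrl+C would. The echo stands in for sh match-script.sh, and the function name demo_trap is made up for this example. It should print "trap fired" followed by "still running":

```shell
# Demo only: the child shell traps signals 1 2 3 6 (SIGHUP, SIGINT, SIGQUIT,
# SIGABRT), then sends itself SIGINT. Instead of being terminated, it runs the
# trap command and then carries on with the next line.
demo_trap() {
  sh -c '
    trap "echo trap fired" 1 2 3 6
    kill -INT $$
    echo "still running"
  '
}

demo_trap
```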

- Create match-script.sh

This script will handle finding the build times.

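```shell
# LOG_FILE is the file that contains all the logs
LOG_FILE="ng_serve.log"

# BUILD_TIMES_FILE is the file that contains the build times
BUILD_TIMES_FILE="build-times.log"

echo "Save build times"

if [ ! -f "$BUILD_TIMES_FILE" ]; then
  # If the build times file doesn't exist, create it
  touch "$BUILD_TIMES_FILE"
fi

# Read the file contents
file_contents=$(cat "$LOG_FILE")

# Extract the build times ("2.302 seconds" -> "2.302"), one per line.
# [0-9] with -E is portable; \d is not supported by every grep.
reloadTime=$(echo "$file_contents" |
  grep -oE '[0-9]+\.[0-9]+ seconds' |
  grep -oE '[0-9]+\.[0-9]+')

# Nothing to report if no build completed
if [ -z "$reloadTime" ]; then
  echo "No build times found in $LOG_FILE"
  exit 0
fi

# The first build time is the initial load
initialLoad=$(echo "$reloadTime" | head -n 1)
initialLoad="{\"message\":\"INITIAL_LOAD\", \"type\": \"INITIAL_LOAD\", \"time\": $initialLoad}"

# Skip the first line of the timing output
reloadTime=$(echo "$reloadTime" | tail -n +2)

# Turn each remaining time into a RELOAD entry followed by a comma
reloadTime=$(echo "$reloadTime" | while read -r line; do
  [ -n "$line" ] && echo "{\"message\":\"RELOAD\", \"type\": \"RELOAD\", \"time\": $line}, "
done)

# Keep a local copy of the entries in the build times file
{ [ -n "$reloadTime" ] && echo "$reloadTime"; echo "$initialLoad"; } >> "$BUILD_TIMES_FILE"
```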

After reading the log, we first match "2.302 seconds" and then keep only "2.302". Both steps use grep -o, which prints only the part of each line that matches the pattern:

```shell
# Extract the build times using portable extended regular expressions
reloadTime=$(echo "$file_contents" |
  grep -oE '[0-9]+\.[0-9]+ seconds' |
  grep -oE '[0-9]+\.[0-9]+')
```
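If you'd rather do the extraction in a single pass, sed can capture just the number. This is an alternative to the two grep calls above, not part of the original script; it only matches the "Application bundle generation complete" lines, and the function name extract_build_times is hypothetical:

```shell
# Hypothetical alternative to the two grep calls: print only the seconds from
# each "Application bundle generation complete. [N.NNN seconds]" line of the
# given log file, one value per line, in the order they were logged.
extract_build_times() {
  sed -n 's/.*Application bundle generation complete\. \[\([0-9][0-9]*\.[0-9][0-9]*\) seconds].*/\1/p' "$1"
}

# Usage, assuming serve.sh has already written ng_serve.log:
# extract_build_times ng_serve.log
```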

As mentioned previously, INITIAL_LOAD is the first value, which we get with head -n 1.

Everything after it (tail -n +2) is a RELOAD.

The output is JSON, so other tools can easily interpret it:

```json
{"message":"RELOAD", "type": "RELOAD", "time": 0.155},
{"message":"INITIAL_LOAD", "type": "INITIAL_LOAD", "time": 2.302}
```

Send logs to Datadog

Generating the data: solved.

Now what are we going to do with it?

For the data to make any sense, we should centralize it in a platform where we can visualize it. There are many tools to choose from.

I've chosen Datadog because it's the one I'm most familiar with. If you have other preferences, go ahead; the steps should be similar.

I won't go into the details of setting up Datadog; their documentation covers everything you need.

Datadog's logs API accepts an array of log entries, so sending a batch is easy. A single curl command does it:

```shell
# match-script.sh
...
curl -X POST "https://http-intake.logs.datadoghq.eu/api/v2/logs" \
  -H "Accept: application/json" \
  -H "Content-Type: application/json" \
  -H "DD-API-KEY: <<DD-API-KEY>>" \
  -d @- << EOF
[
$reloadTime
$initialLoad
]
EOF
```

Depending on your region, the URL might be different. I recommend consulting Datadog's logs API documentation for the right endpoint.

You will need to get an API key from Datadog (Access > API Keys) and use it in place of <<DD-API-KEY>>.
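A slightly more reusable sketch of the same request reads the key from an environment variable instead of hard-coding it in the script, and builds the intake URL from your Datadog site. DD_API_KEY and DD_SITE are assumed names here (ordinary shell variables, defaulting to the EU site used above), as are the function names dd_logs_url and send_build_times; check the URL pattern for your region against Datadog's documentation:

```shell
# Sketch: build the logs intake URL for a Datadog site such as
# datadoghq.eu or datadoghq.com (defaults to datadoghq.eu).
dd_logs_url() {
  echo "https://http-intake.logs.${1:-datadoghq.eu}/api/v2/logs"
}

# Sketch: POST the JSON array read from stdin to Datadog. The API key is read
# from DD_API_KEY so it never has to be committed with the script.
send_build_times() {
  if [ -z "${DD_API_KEY:-}" ]; then
    echo "DD_API_KEY is not set" >&2
    return 1
  fi
  curl -sS --fail -X POST "$(dd_logs_url "${DD_SITE:-}")" \
    -H "Accept: application/json" \
    -H "Content-Type: application/json" \
    -H "DD-API-KEY: $DD_API_KEY" \
    --data-binary @-
}

# Usage:
# export DD_API_KEY="<your API key>"
# echo '[{"message":"RELOAD","type":"RELOAD","time":0.155}]' | send_build_times
```

--data-binary sends the body exactly as read, whereas -d strips newlines from data read with @; both work for this JSON.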

Putting $reloadTime before $initialLoad in the array is just for convenience: every RELOAD entry ends with a comma and the INITIAL_LOAD entry doesn't, so the array never ends with a trailing comma and no extra logic is needed.
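If you'd rather not depend on that ordering, another option is to write commas between entries instead of after each one. Here is a sketch using awk, assuming the times are available as TYPE SECONDS lines (for example INITIAL_LOAD 2.302); that input format and the function name to_json_array are assumptions for this example:

```shell
# Hypothetical helper: reads "TYPE SECONDS" lines from stdin and prints one JSON
# array with the same fields as the article's logs. A comma is written before
# every entry except the first, so there is never a trailing comma.
to_json_array() {
  awk 'BEGIN { printf "[" }
       NF == 2 {
         if (n++) printf ","
         printf "{\"message\":\"%s\",\"type\":\"%s\",\"time\":%s}", $1, $1, $2
       }
       END { print "]" }'
}

printf 'INITIAL_LOAD 2.302\nRELOAD 0.155\n' | to_json_array
# [{"message":"INITIAL_LOAD","type":"INITIAL_LOAD","time":2.302},{"message":"RELOAD","type":"RELOAD","time":0.155}]
```

With no input it prints an empty array, [], which is still valid JSON.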

From now on, you can visualize your logs in Datadog. Feel free to add extra attributes or anything else relevant to your scenario.

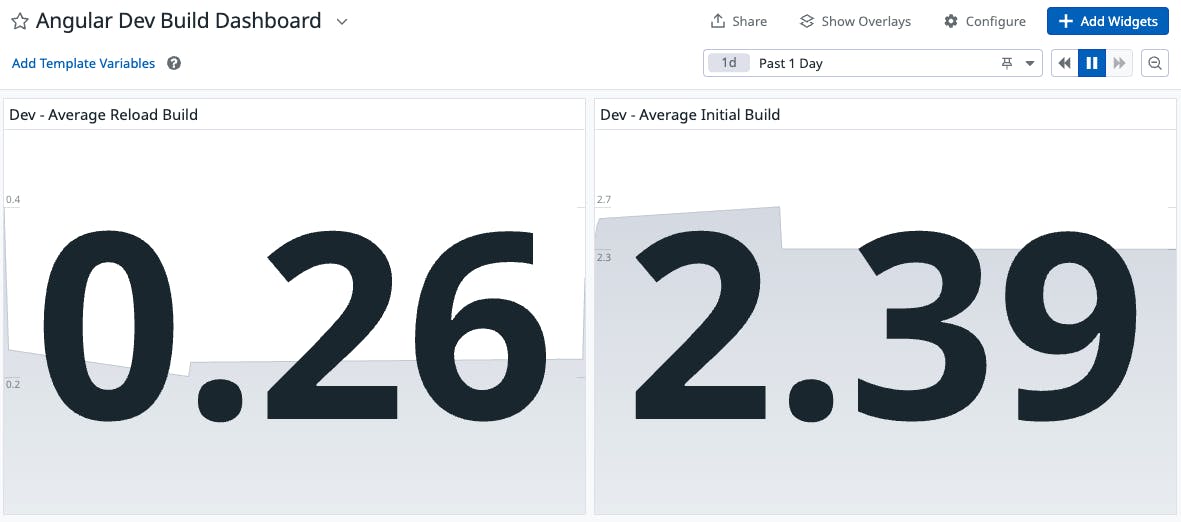

You can also use dashboards to track the average initial load and reload times, watch them over time, and spot when the build time started to get out of control.
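Dashboards are the natural place for this, but for a quick local sanity check, here is a sketch that averages build times per type. It assumes the times are stored as TYPE SECONDS lines in a file (for example RELOAD 0.155); that format and the function name average_build_times are hypothetical:

```shell
# Hypothetical helper: prints "TYPE COUNT AVERAGE" for each build type found in
# a file of "TYPE SECONDS" lines, sorted by type.
average_build_times() {
  awk 'NF == 2 { sum[$1] += $2; count[$1]++ }
       END {
         for (type in sum)
           printf "%s %d %.3f\n", type, count[type], sum[type] / count[type]
       }' "$1" | sort
}

# Usage, assuming build-times.txt holds lines like "RELOAD 0.155":
# average_build_times build-times.txt
```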

Monitoring from an early stage of development will allow you to react faster when the development experience degrades.

Also, once you have the when, you can identify the what and take the necessary steps to improve that pain point.

For me, writing everything in shell was fun, and a nice change from my day-to-day work in higher-level languages.